Azure Data Explorer (ADX) is a great playground for capturing, transforming and analyzing data. With its straightforward syntax, functions, policies and data connections, it often becomes the central data hub for IoT data projects. To govern such a setup with IaC (Infrastructure as Code), Terraform is one obvious choice. Since the official Azure provider (azurerm, the Azure plugin for Terraform, if you will) supports a lot of ADX resources, using it to automate ADX deployments and resources seems like a logical step. For us, it became rather “hacky” really fast.

This is why, in this blog post, we would like to share the pitfalls we encountered while using the azurerm provider and how a community-driven ADX provider saved us a lot of blood, sweat and tears.

Nothing is wrong with azurerm. It offers a lot of great functionality to automate ADX resources like clusters, databases and data connections. For example, after configuring the provider in the Terraform configuration with:

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "3.48.0"
    }
  }
}

provider "azurerm" {
  # azurerm 3.x requires a features block, even if it is empty
  features {}

  # Further configuration options
}
```

an entire ADX cluster can be provisioned with ease:

```hcl
resource "azurerm_kusto_cluster" "cluster" {
  name                = "kustocluster"
  location            = azurerm_resource_group.example.location
  resource_group_name = azurerm_resource_group.example.name

  sku {
    name     = "Standard_D13_v2"
    capacity = 2
  }
}
```

In addition, databases within this cluster can also be created super easily with the official provider:

```hcl
resource "azurerm_kusto_database" "database" {
  name                = "my-kusto-database"
  resource_group_name = azurerm_resource_group.example.name
  location            = azurerm_resource_group.example.location
  cluster_name        = azurerm_kusto_cluster.cluster.name

  hot_cache_period   = "P7D"
  soft_delete_period = "P31D"
}
```

Furthermore, data connections to an Event Hub, IoT Hub or Event Grid can be established, and roles for principals can be managed at the database and cluster level. In general, the official provider offers the means to manage an ADX cluster on the control plane.
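Both kinds of control-plane resources can be sketched with azurerm as follows. This is a minimal example, assuming an Event Hub and a consumer group defined elsewhere in the same configuration and a hypothetical variable for the group that should get read access; names such as `telemetry-connection` are placeholders. Note that the target table and ingestion mapping referenced by the connection live on the data plane, which is exactly where the trouble described below starts.

```hcl
# Sketch only: azurerm_eventhub.example and azurerm_eventhub_consumer_group.example
# are assumed to exist elsewhere in the configuration.
data "azurerm_client_config" "current" {}

# Hypothetical variable holding the object ID of an Entra ID group
variable "viewer_group_object_id" {
  type = string
}

# Control plane: stream events from an Event Hub into the database
resource "azurerm_kusto_eventhub_data_connection" "telemetry" {
  name                = "telemetry-connection"
  resource_group_name = azurerm_resource_group.example.name
  location            = azurerm_resource_group.example.location
  cluster_name        = azurerm_kusto_cluster.cluster.name
  database_name       = azurerm_kusto_database.database.name

  eventhub_id    = azurerm_eventhub.example.id
  consumer_group = azurerm_eventhub_consumer_group.example.name

  # Target table and ingestion mapping are data-plane artifacts (hypothetical
  # names) that azurerm itself does not model as resources
  table_name        = "Telemetry"
  mapping_rule_name = "TelemetryMapping"
  data_format       = "JSON"
}

# Control plane: grant a group read access on the database
resource "azurerm_kusto_database_principal_assignment" "viewers" {
  name                = "telemetry-viewers"
  resource_group_name = azurerm_resource_group.example.name
  cluster_name        = azurerm_kusto_cluster.cluster.name
  database_name       = azurerm_kusto_database.database.name

  tenant_id      = data.azurerm_client_config.current.tenant_id
  principal_id   = var.viewer_group_object_id
  principal_type = "Group"
  role           = "Viewer"
}
```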

However, an ADX cluster also lives off the content of its databases: the artifacts on the data plane. Tables, materialized views, functions, update policies, ingestion mappings and row-level security are just a few of the artifacts that make up a functioning cluster. Strictly speaking, a table or a function is not an “infrastructure” resource. Still, we wanted to manage the whole state of ADX (minus the data, which we back up to Blob Storage), especially since there is no elegant way to back up a database with all its structure, functions and policies. For these artifacts, the azurerm provider offers the possibility to create them via a resource called “Kusto Script” (`azurerm_kusto_script`).

A Kusto Script is an ADX feature: a text script that you register in your ADX cluster and run. Within this script, you can issue any number of KQL (Kusto Query Language) commands. The script can be provided inline or via a link to a text file in Azure Blob Storage.
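The Blob Storage variant can be sketched as below. It assumes a blob resource named `azurerm_storage_blob.kql` that holds the KQL commands and a SAS token passed in through a variable; both names are hypothetical. In the azurerm provider, `url` together with `sas_token` is the alternative to the inline `script_content` shown in the next example.

```hcl
# Hypothetical SAS token granting read access to the script blob
variable "script_sas_token" {
  type      = string
  sensitive = true
}

resource "azurerm_kusto_script" "from_blob" {
  name        = "from-blob"
  database_id = azurerm_kusto_database.database.id

  # Script is read from a text file in Blob Storage instead of script_content
  url       = azurerm_storage_blob.kql.url
  sas_token = var.script_sas_token

  # Abort on the first failing command
  continue_on_errors_enabled = false
}
```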

That sounds great, doesn’t it? Everything can be scripted according to individual needs. For example:

```hcl
resource "azurerm_kusto_script" "example" {
  name                       = "example"
  database_id                = azurerm_kusto_database.database.id
  continue_on_errors_enabled = true

  # Inner double quotes must be escaped inside an HCL string
  script_content = ".create table Test1 (Name:string,String:string,Counter:int) with (folder = \"my/folder\")"
}
```

This creates a table, and you can issue as many statements in the same script as you want.

But here the trouble begins:

Only 50 such Kusto Scripts can be defined per ADX cluster. As of 3/2023, this is a hard limit that cannot be raised. For large production systems where many projects share the same cluster, this quickly becomes a bottleneck and forces each project to cram all of its commands into one script, resulting in really unpleasant code for concatenating everything within Terraform. In our case, we had several hundred table, materialized view and policy commands in one script. It worked, but it made maintenance really difficult.
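What this concatenation looks like can be sketched as follows: hypothetical lists of commands are kept in `locals` and merged into a single `script_content` with Terraform's built-in `concat` and `join` functions. The table names and the caching policy are illustrative only, and separating commands by a blank line is a conservative choice on our side rather than a documented requirement.

```hcl
locals {
  # Hypothetical commands collected from several projects
  table_commands = [
    ".create-merge table Test1 (Name:string, String:string, Counter:int) with (folder = \"my/folder\")",
    ".create-merge table Test2 (Id:string, Value:real)",
  ]
  policy_commands = [
    ".alter table Test1 policy caching hot = 7d",
  ]
}

resource "azurerm_kusto_script" "all_in_one" {
  name                       = "all-in-one"
  database_id                = azurerm_kusto_database.database.id
  continue_on_errors_enabled = false

  # Every command of every project ends up in one text blob,
  # one command per line with a blank line in between
  script_content = join("\n\n", concat(local.table_commands, local.policy_commands))
}
```

With hundreds of commands, every project edits the same lists, and a single broken command affects the whole script.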

An even bigger issue is the statefulness of the Kusto Script: since the whole script is treated as one Terraform resource, Terraform cannot track the individual artifacts it creates. It detects changes to the script and runs it again, but it never detects and destroys obsolete artifacts.
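In practice, this means an obsolete artifact has to be removed with an explicit command. The sketch below assumes a previously created table `Test2` (hypothetical) that is no longer wanted: simply deleting its `.create` line from the script would leave the table in place, so a `.drop table ... ifexists` command is added instead, assuming `.drop` commands are accepted in a database script on your cluster. `force_an_update_when_value_changed` is the azurerm argument that makes the script run again even when its content is unchanged.

```hcl
resource "azurerm_kusto_script" "cleanup_example" {
  name                       = "cleanup-example"
  database_id                = azurerm_kusto_database.database.id
  continue_on_errors_enabled = false

  # Removing a .create line does not drop the table, so the drop is explicit
  script_content = <<-KQL
    .create-merge table Test1 (Name:string, String:string, Counter:int)

    .drop table Test2 ifexists
  KQL

  # Any new value forces the script to be applied again
  force_an_update_when_value_changed = "2023-03-01"
}
```

The drop command then has to stay in the script (or be cleaned up by hand later), which is exactly the bookkeeping a stateful provider would do for you.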

But this is still not the most inconvenient shortcoming of Kusto Scripts. The worst thing happens whenever a script runs into errors. As you can see above, there is a flag called “continue_on_errors_enabled” that controls whether the script skips erroneous lines or aborts the execution. At the same time, whenever the script runs into a syntax error, Terraform removes it from its own state. This means that Terraform “forgets” that it is managing the script, so both terraform plan and terraform apply fail during the next run. And this is where the inconvenience peaks: to get Terraform to control the script again, the original script needs to be deleted from ADX. But how can we do that? The ADX UI offers no link to the page where the script can be deleted. To be able to delete the script without using the API, we constructed the links to our Kusto Scripts in the following manner:

`portal.azure.com/#@<ourcompany>.onmicrosoft.com/resource/subscriptions/<oursubscription>/resourceGroups/<adxresourcegroup>/providers/Microsoft.Kusto/Clusters/<clustername>/Databases/<dbname>/Scripts/<scriptnameasdefinedinTF>/overview`

Using such a constructed link will lead you to the juicy delete button to get rid of the script.
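Instead of assembling this link by hand, Terraform can print it as an output. A sketch, assuming a hypothetical variable `tenant_domain` (e.g. `<ourcompany>.onmicrosoft.com`) and that the portal accepts the segment casing of the ARM ID that azurerm generates (ARM resource IDs are generally case-insensitive). Because the URL is built from the database ID and the script name rather than from the script resource itself, it stays available even after Terraform has dropped the script from its state.

```hcl
# Hypothetical inputs for the link
variable "tenant_domain" {
  type        = string
  description = "Tenant domain, for example <ourcompany>.onmicrosoft.com"
}

variable "script_name" {
  type    = string
  default = "example"
}

output "kusto_script_portal_url" {
  # The database ID already has the form
  # /subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.Kusto/clusters/<cluster>/databases/<db>
  # (segment casing may differ from the hand-built link)
  value = format(
    "https://portal.azure.com/#@%s/resource%s/scripts/%s/overview",
    var.tenant_domain,
    azurerm_kusto_database.database.id,
    var.script_name
  )
}
```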

Surely there are other ways to get around these shortcomings, like using the API, or running terraform destroy to get rid of the scripts after execution. However, these approaches usually interfere with safeguards such as locks.

To solve our issues, we switched from the azurerm provider to the community-driven ADX provider (favoretti/adx).

This provider was built to easily manage artifacts in ADX and perform CRUD operations on them. However, it is not intended to work on the control plane, for example to grant permissions at the cluster or database level or to create data connections to Event Hubs.
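Because the ADX provider deliberately stays on the data plane, it is typically combined with azurerm in one configuration: azurerm creates the cluster and database, and the adx provider is pointed at the cluster endpoint. A sketch, assuming the cluster and database resources from above, the azurerm provider block shown earlier, and hypothetical variables for a service principal; the provider arguments are the ones listed in the provider block further below. One caveat: a provider configured from a resource attribute cannot be fully configured while that attribute is still unknown, so on a fresh deployment the cluster may need to be created first (for example with `terraform apply -target=...`) or in a separate configuration.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "3.48.0"
    }
    adx = {
      source  = "favoretti/adx"
      version = "0.0.21"
    }
  }
}

# Hypothetical service principal credentials for the data plane
variable "client_id" { type = string }
variable "tenant_id" { type = string }
variable "client_secret" {
  type      = string
  sensitive = true
}

provider "adx" {
  # Cluster URI exported by azurerm, e.g. https://<cluster>.<region>.kusto.windows.net
  adx_endpoint  = azurerm_kusto_cluster.cluster.uri
  client_id     = var.client_id
  client_secret = var.client_secret
  tenant_id     = var.tenant_id
}

# Data plane: a table inside the database that azurerm created
resource "adx_table" "telemetry" {
  name          = "Telemetry"
  database_name = azurerm_kusto_database.database.name
  table_schema  = "DeviceId:string,Timestamp:datetime,Value:real"
}
```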

To use the ADX provider in your own project, install it the same way as the official provider:

```hcl
terraform {
  required_providers {
    adx = {
      source  = "favoretti/adx"
      version = "0.0.21"
    }
  }
}

provider "adx" {
  # adx_endpoint  = "..."
  # client_id     = "..."
  # client_secret = "..."
  # tenant_id     = "..."
}
```

Now, artifacts like a table can be deployed to ADX:

resource "adx_table" "test" {

name = "Test1"

database_name = "test-db"

table_schema = "Name:string,String:string,Counter:int"

folder = "my/folder"

docstring = "This describes the entity"

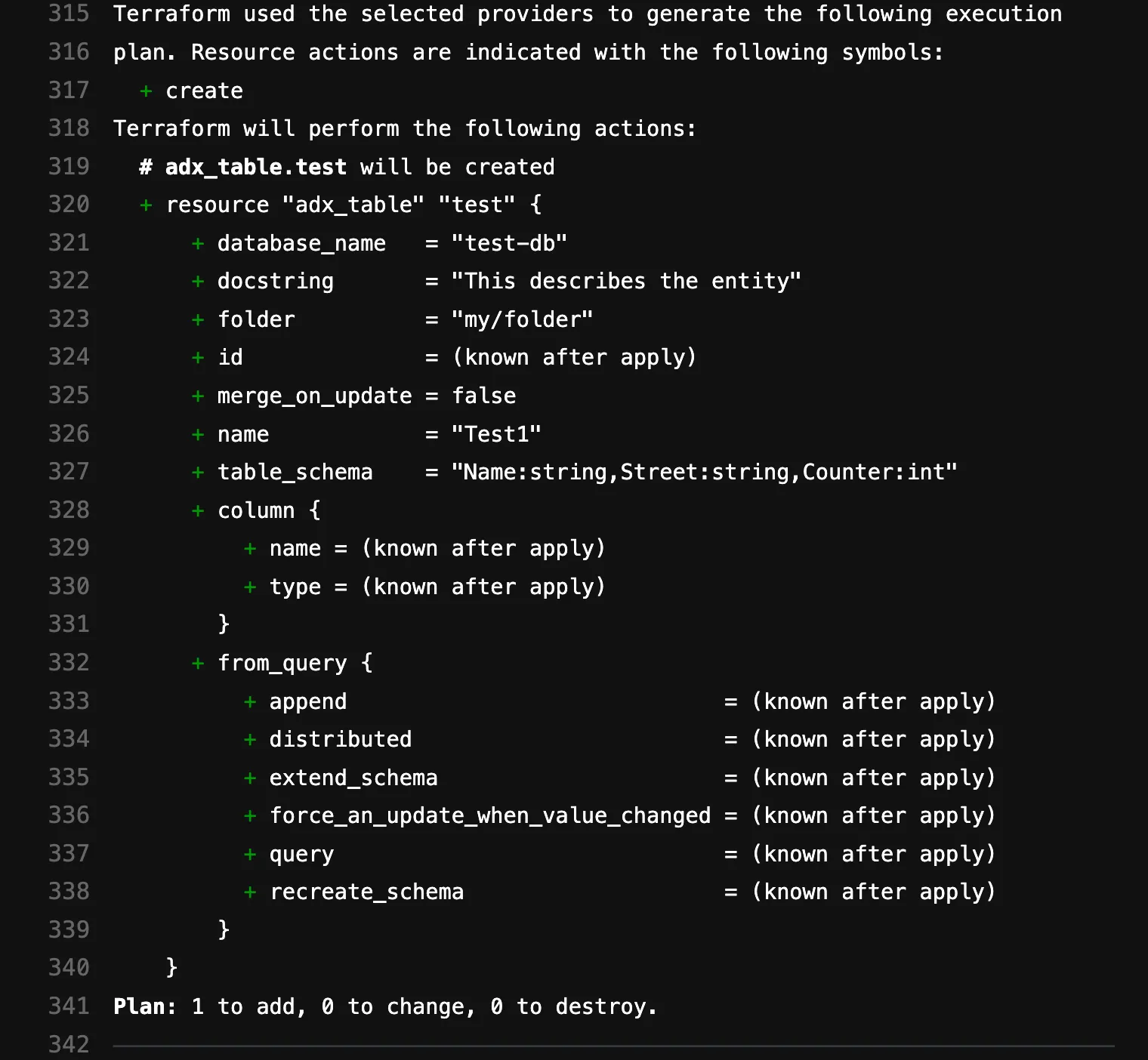

}If we now execute terraform plan first, we see something like this:

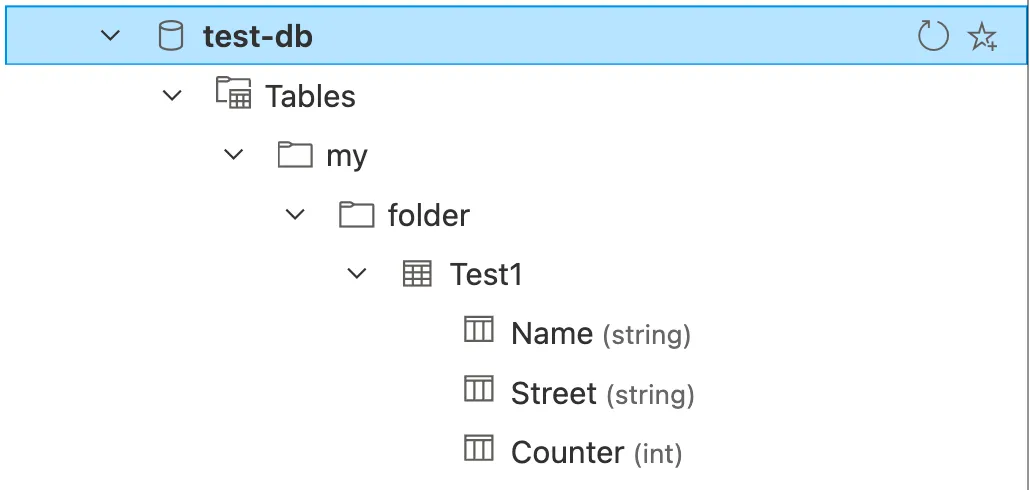

After running terraform apply, a table with the corresponding schema is deployed in the specified folder.

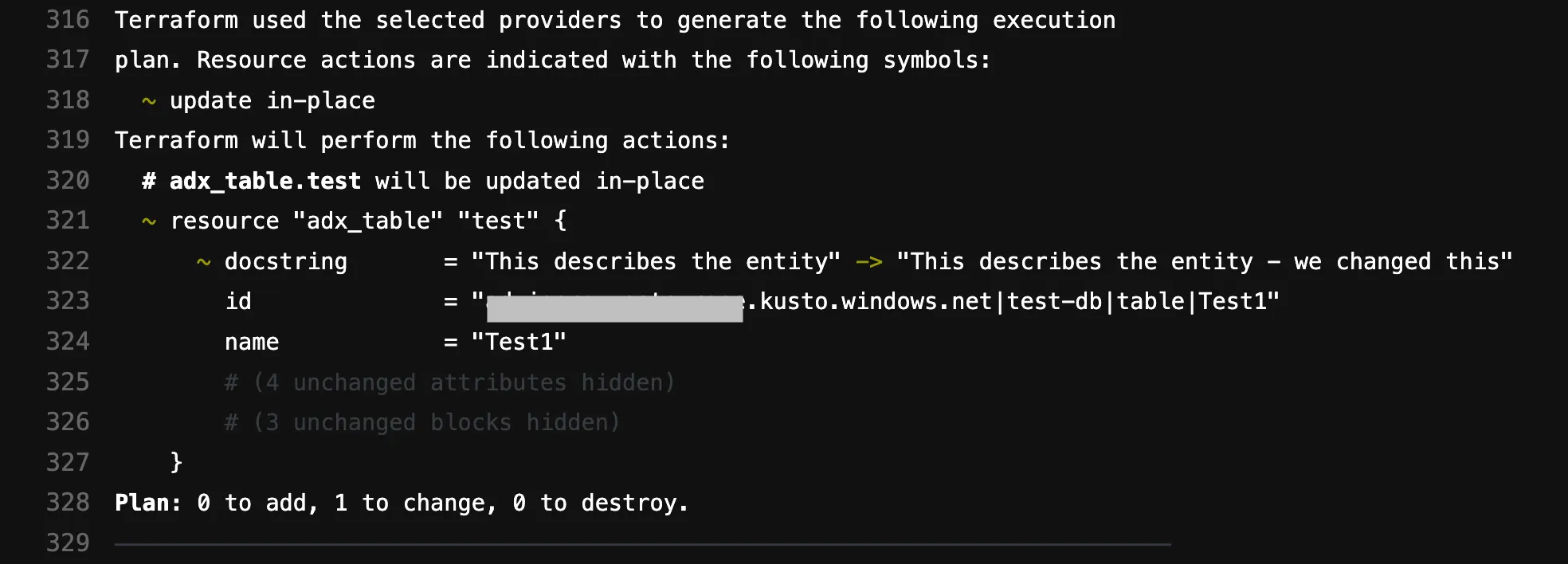

This looks great. Now let us change a property of the table to check whether we can update a resource with this provider.

We change the docstring parameter:

resource "adx_table" "test" {

name = "Test1"

database_name = "test-db"

table_schema = "Name:string,String:string,Counter:int"

folder = "my/folder"

docstring = "This describes the entity - we changed this"

}Then, after executing terraform plan, we will see:

This will look familiar to you if you’ve worked with other Terraform providers. Only the change is visible in the output. Sweet and clean.

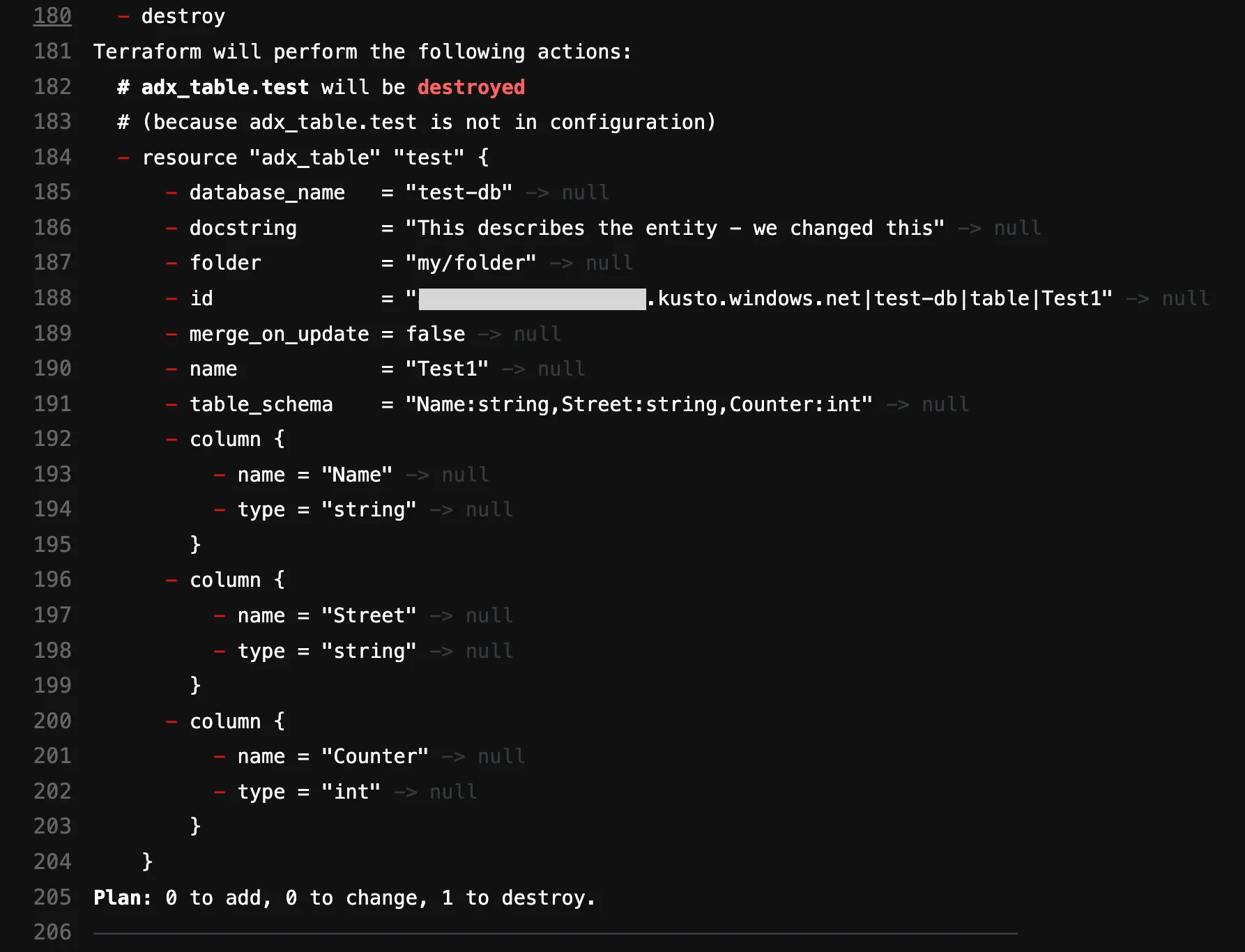

Good. We have come very far. Let us face the final boss and try to delete this artifact within ADX by removing the entire resource block in the code. We can throw the lines in the garbage or just comment them out (like we did).

```hcl
#resource "adx_table" "test" {
#  name          = "Test1"
#  database_name = "test-db"
#  table_schema  = "Name:string,String:string,Counter:int"
#  folder        = "my/folder"
#  docstring     = "This describes the entity - we changed this"
#}
```

In the best case, it will destroy the table, so fingers crossed. When we now run terraform plan again, we see:

Buckets! With this plan in our pocket, we can simply apply it to remove the table from the ADX cluster database.
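Because every artifact is its own resource with its own state entry, the same mechanism scales beyond a single table. A sketch using Terraform's `for_each` over a hypothetical `local.tables` map, assuming the `adx` provider is configured as shown earlier: removing one entry from the map should make terraform plan show a destroy for exactly that table, which is the stateful behavior the Kusto Script lacks.

```hcl
locals {
  # Hypothetical table definitions: key = table name
  tables = {
    Test1 = {
      schema = "Name:string,String:string,Counter:int"
      folder = "my/folder"
    }
    Test2 = {
      schema = "Id:string,Value:real"
      folder = "my/folder"
    }
  }
}

# One adx_table instance (and one state entry) per map entry
resource "adx_table" "tables" {
  for_each = local.tables

  name          = each.key
  database_name = "test-db"
  table_schema  = each.value.schema
  folder        = each.value.folder
}
```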

This is it. In this way, we now have the ability to manage materialized views, caching policies, row-level security, ingestion mappings and many other artifacts. You should definitely check out the documentation of this ADX provider and try this juicy thing yourself. We bet you will love it.

If you dive deeper into the ADX provider code base, you will quickly recognize that it was written using the Terraform Plugin SDK v2, with the azure-kusto-go module under the hood to translate Terraform resources into Kusto commands. Since the provider is open source, everyone is welcome to contribute to its development.

That is all for now. Happy coding.