k6 is written in Go and exposes a JavaScript-based load testing framework. We often use it to test our components. k6 makes black-box testing of whole components a lot easier and, as the name suggests, it lets you load test your components to analyze how they behave under pressure.

Recently, I had to test a streaming-based component that exposes no API other than its access to Azure Event Hub. So, how do you test such a component? Well, you could just connect to your DEV environment and push messages to Azure Event Hub. But wait… how do you just push messages to Event Hub?

In my case, as we wanted to load test our whole application, I decided to use k6 to ingest messages into Azure Event Hub. However, as there is no “official” k6 interface to Azure Event Hub yet, I had to use the Apache Kafka k6 extension, which works because Event Hub offers a Kafka interface.

First, you have to install k6, which is pretty straightforward if you follow the docs.

Next, we have to install the Kafka adapter. The process here is as follows:

    go install go.k6.io/xk6/cmd/xk6@latest
    xk6 build --with github.com/mostafa/xk6-kafka@latest

Yes, you have read correctly. We are creating a new executable with the xk6 build command, which will then be located in the folder we executed that command from. k6 executes JavaScript from Go, so extensions have to be written in Go and provide the required interfaces so that k6 can generate JS bindings from them. Thus, make sure to use the freshly built k6 executable and not the default one you might have installed.

Now we are all set to run our tests against Azure Event Hub. Let’s get started.

Start your Azure Event Hub instance and create a topic.

First, let’s add the following import statements to our script. We are going to need them later:

    import {
      Writer,
      Connection,
      SchemaRegistry,
      CODEC_SNAPPY,
      SCHEMA_TYPE_JSON,
      SASL_PLAIN,
      TLS_1_2,
    } from 'k6/x/kafka'; // import kafka extension

In order to connect to Azure Event Hub, we are going to need the connection string. We can use the connection string as well to get the URL to the event hub, such that we only need to configure this variable.

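k6 scripts do not run in Node.js, so there is no process.env. Instead, k6 exposes environment variables through the global __ENV object, and we pass our values with the -e <NAME>=<VALUE> option when executing the script later. So, let us have a look at the first const assignments that load this data.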

    const brokers = [
      __ENV.EHUB_CONNECTION_STRING.split('//')[1].split('/')[0] + ':9093',
    ];
    const topic = __ENV.EHUB_TOPIC_NAME;
    const connection_string = __ENV.EHUB_CONNECTION_STRING;

In k6, we can only read these passed environment variables via __ENV.<var_name>, where <var_name> is your variable’s name.

Here we are defining several variables. Kafka clients connect to brokers, so we need to set an appropriate broker address for Azure Event Hub. We extract the host from the connection string and append port 9093, which is the port of the Kafka interface of Azure Event Hub. Additionally, we read the topic name for the Event Hub.
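
The string splitting above assumes that the connection string has the usual Endpoint=sb://<host>/;SharedAccessKeyName=...;SharedAccessKey=... shape. As a minimal sketch, the same extraction can be wrapped in a small function that fails early with a readable message when a variable was not passed via -e. The helper name brokerFromConnectionString and its error messages are my own illustration and not part of k6 or the Kafka extension.

```javascript
// Hypothetical helper (not part of k6 or xk6-kafka): derives the Kafka
// broker address from an Event Hub connection string. It assumes the
// string contains "Endpoint=sb://<host>/", like the split-based snippet.
function brokerFromConnectionString(connectionString) {
  if (!connectionString) {
    throw new Error('EHUB_CONNECTION_STRING is not set, pass it via -e');
  }
  const match = /Endpoint=sb:\/\/([^\/;]+)/.exec(connectionString);
  if (!match) {
    throw new Error('Connection string has no Endpoint=sb://<host>/ part');
  }
  // 9093 is the port of the Kafka interface of Azure Event Hub.
  return match[1] + ':9093';
}

// In the k6 script this would replace the split chain:
// const brokers = [brokerFromConnectionString(__ENV.EHUB_CONNECTION_STRING)];
```

The benefit is mostly diagnostic: a missing or malformed variable produces one clear error during script initialization instead of a TypeError from calling split on undefined.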

Next, we need to configure SASL for accessing Event Hub and TLS:

    const saslConfig = {
      username: '$ConnectionString',
      password: connection_string,
      algorithm: SASL_PLAIN,
    };

    const tlsConfig = {
      enableTls: true,
      insecureSkipTlsVerify: false,
      minVersion: TLS_1_2,
    };

    const writer = new Writer({
      brokers: brokers,
      topic: topic,
      sasl: saslConfig,
      tls: tlsConfig,
      autoCreateTopic: false,
    });

    const schemaRegistry = new SchemaRegistry();

As you can see, the login for the Kafka interface of Azure Event Hub is the user $ConnectionString combined with the whole connection string as the password. As the algorithm, we are using SASL_PLAIN.

Then, TLS is enabled, which is mandatory for connections to Azure Event Hub.

Finally, we are creating a writer to write messages to Azure Event Hub and a schema registry.

The last piece of our code sends messages to Azure Event Hub. We do so by calling writer.produce({ messages: messages }), which lets us pass multiple messages at once.

This code, as always in k6, has to be put into the default function that we are exporting:

    export default function () {
      let messages = [
        {
          key: schemaRegistry.serialize({
            data: {
              correlationId: 'correlationId',
            },
            schemaType: SCHEMA_TYPE_JSON,
          }),
          // We are sending JSON data here
          value: schemaRegistry.serialize({
            data: fileContent, // Adapt fileContent to your needs, where you put your content
            schemaType: SCHEMA_TYPE_JSON,
          }),
          headers: {
            headerkey: 'headervalue',
          },
          time: new Date(), // Will be converted to timestamp automatically
        },
      ];
      writer.produce({ messages: messages });
    }

This works. Great! However, ideally we want to manipulate messages or send predefined messages with some minor adjustments. So, let us load some JSON files and send them as messages to Kafka.

Unfortunately, this is not so easy. As mentioned, k6 is written in Go and just exposes a JS interface. This means it does not run in Node.js and therefore does not support the fs module. So, here is my workaround, as proposed in this GitHub issue.

Let us create a simple file, filenames.json, that lists the file names.

    ["json/file1.json", "json/file2.json", "json/file3.json", "json/file4.json"]

Next, we need to load these in our script like this:

    const fileContents = [];
    const filePaths = JSON.parse(open('filenames.json'));
    filePaths.forEach((fileName) => fileContents.push(open(fileName)));

So, now fileContents holds the content of each of the different files. Let us send them to Azure Event Hub now.

    export default function () {
      for (let index = 0; index < fileContents.length; index++) {
        // Update timestamp to current to force updates on DB.
        const fileContent = JSON.parse(fileContents[index]);
        fileContent.timestamp = new Date().toISOString();
        let messages = [
          {
            //[...same as above]
            // The data type of the value is JSON
            value: schemaRegistry.serialize({
              data: fileContent,
              schemaType: SCHEMA_TYPE_JSON,
            }),
            //[...same as above]
          },
        ];
        writer.produce({ messages: messages });
      }
    }

Alternatively, you can of course also create the array first and not send messages individually, as I do here.
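
The alternative mentioned above, building the array first and calling produce only once, could look roughly like the following sketch. The function name buildMessages and its serialize parameter are hypothetical: serialize stands in for a small wrapper around schemaRegistry.serialize, so that the function itself stays independent of the Kafka extension.

```javascript
// Hypothetical helper: turns the raw JSON strings loaded via open() into
// one array of messages, so that a single produce call is enough.
// `serialize` is an assumed callback; in the k6 script it could be
// (data) => schemaRegistry.serialize({ data: data, schemaType: SCHEMA_TYPE_JSON }).
function buildMessages(fileContents, serialize, now) {
  return fileContents.map((raw) => {
    const fileContent = JSON.parse(raw);
    // Update timestamp to current, as in the loop above.
    fileContent.timestamp = now.toISOString();
    return {
      key: serialize({ correlationId: 'correlationId' }),
      value: serialize(fileContent),
      headers: { headerkey: 'headervalue' },
      time: now,
    };
  });
}

// Inside the default function of the k6 script, this would be used as:
// writer.produce({ messages: buildMessages(fileContents, serialize, new Date()) });
```

Whether one large batch or several single-message calls is preferable depends on what you want to measure, so treat this as a variation to try out rather than a recommendation.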

Well, and that is it. Now we just need to run k6 (using our locally built executable) and inspect how many entries get ingested.

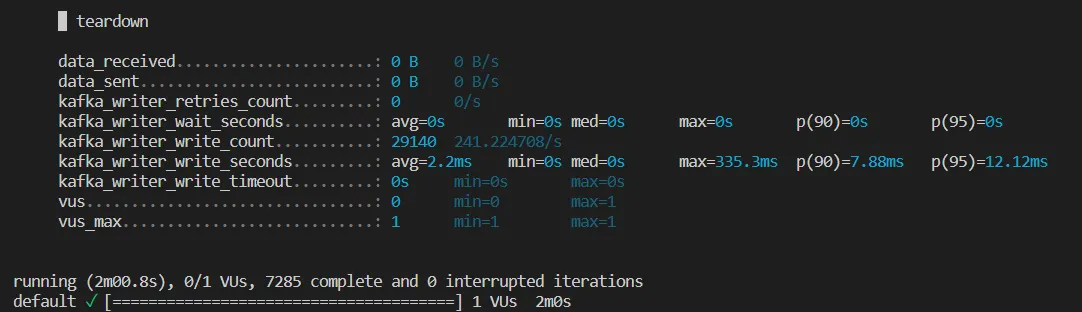

    ./k6 run -e EHUB_CONNECTION_STRING="<eventhub_connection_string>" -e EHUB_TOPIC_NAME="<eventhub_topic_name>" --duration 2m k6_eventhub_test.js

Quote the connection string, as it contains semicolons that the shell would otherwise interpret. With such a small load test snippet and a 2-minute test run, I was able to ingest 29140 messages into the queue. Of course, to properly monitor the performance of applications consuming from Azure Event Hub, we would need to monitor them separately, e.g. via Prometheus. However, this is a good starting point for easy test data generation and performance analysis.
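
To put that number into perspective, it can be converted into an average rate. The snippet below is a small sketch that only does the arithmetic on the figures quoted above; the function name messagesPerSecond is my own.

```javascript
// Average throughput in messages per second for a finished run.
function messagesPerSecond(messageCount, durationSeconds) {
  if (durationSeconds <= 0) {
    throw new Error('durationSeconds must be positive');
  }
  return messageCount / durationSeconds;
}

// 29140 messages in a 2 minute (120 second) run:
const rate = messagesPerSecond(29140, 120);
console.log(rate.toFixed(1)); // prints "242.8"
```

This is an average over the whole run and says nothing about peaks or about how quickly the consuming application keeps up.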